What incremental innovation looks like

My strongest memory of innovation? An A4 piece of paper.

An old school friend of mine was the first in our class with internet access – a magic portal to another world. She’d taken requests in advance for pictures of our favourite bands to print. As she generously dished out the pages, we each took our pile and pored over the tiny, heavily pixelated images. You had to look from afar; otherwise, they were just smudged coloured blobs. That didn’t bother us. It wouldn’t be long until I had the luxury of my own Dalek-style dial-up internet to behold all those treasured visuals for myself.

Innovation reframes connection, social and cultural perception and the art of what’s possible. It raises the bar. Done well, it inspires and challenges us to excel. It improves the quality and value of our services. For us as consumers, it makes parts of life that much smoother (no-one comes between me and my air fryer).

Now for the existential elephant in the room. Not all innovation is responsible or, to be specific, used responsibly. History’s shown us how highly innovative technology can both elevate and devastate. Even the outputs of well-intentioned innovation can be easily adapted for malicious intent such as threats, coercion and intimidation. It’s a heavy subject and I could discuss it at length. Instead, to keep this blog concise and avoid making you all miserable, I’m focusing for now on the opportunities innovation offers us in comms.

Innovation is one of those overused words that risks numbing us to its meaning. As exciting as radical innovation is, it’s important to also consider incremental innovation – the power of gradual steps towards improvement born of creative thinking, collaboration and ambition. Here are some tangible ways to achieve that in Strategic Comms.

Automated dashboards

An underrated must. Make sure you’re producing that weekly, monthly or ad hoc report in minutes, not hours. You can then dedicate more time to data checks and generating high-quality insight. User-friendly, automated dashboards save time for evaluators and their audiences alike. Anyone who, like me, has felt the pain of manual chart formatting or broken PowerPoint links knows this all too well.

Current fans of the data visualisation tool Tableau might know that Salesforce is incorporating generative AI into its upcoming offerings, Tableau GPT and Tableau Pulse, with more hinted to come. Likewise, Microsoft is expanding its product line with AI-assisted tech, including data and insights products. Government departments often require a business case for using new or updated software, to ensure value for money for taxpayers. If this applies to you and you don’t already use such tools, your rationale could broadly include:

- Greater transparency of data

- Easier benchmarking and competitor comparison

- Stronger trend and anomaly recognition

- Interactive, easily shareable outputs
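To make the “minutes, not hours” and anomaly-recognition points concrete, here is a minimal Python sketch. It assumes, hypothetically, that your weekly channel figures can be exported as a CSV with `week` and `impressions` columns; it totals the figures and flags any week more than two standard deviations from the average (a simple z-score rule). It’s an illustration of the idea only, not how Tableau or Microsoft’s tools work under the hood, and the data is made up.

```python
import csv
import io
import statistics


def load_weekly_metric(csv_text: str, column: str) -> list[tuple[str, float]]:
    """Read (week, value) pairs from CSV text with a 'week' column (hypothetical layout)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row["week"], float(row[column])) for row in reader]


def summarise(series: list[tuple[str, float]]) -> dict[str, float]:
    """Headline figures for a weekly report."""
    values = [value for _, value in series]
    return {"weeks": len(values), "total": sum(values), "average": statistics.mean(values)}


def flag_anomalies(series: list[tuple[str, float]], threshold: float = 2.0) -> list[str]:
    """Return weeks whose value is more than `threshold` standard deviations from the mean."""
    values = [value for _, value in series]
    mean = statistics.mean(values)
    spread = statistics.pstdev(values)
    if spread == 0:  # every week identical: nothing stands out
        return []
    return [week for week, value in series if abs(value - mean) / spread > threshold]


# Made-up example data: a steady run of weeks, then a spike in week 8.
EXAMPLE_CSV = """week,impressions
W1,100
W2,102
W3,98
W4,101
W5,99
W6,100
W7,103
W8,160
"""

series = load_weekly_metric(EXAMPLE_CSV, "impressions")
print(summarise(series))
print(flag_anomalies(series))
```

Run on a schedule against each new export, a few lines like these free up time to ask why week 8 spiked rather than rebuilding the chart by hand.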

Experiment

Test and learn is, for me, one of the most enjoyable parts of digital evolution. As comms experts, we’re learning as we go, as are the platforms themselves. (Case in point: OpenAI’s U-turn on Browse with Bing, which reportedly allowed users to bypass paywalled websites.) While social media’s latest disrupter, Threads, can celebrate gaining 10 million users in seven hours, it has fared less well on the accessibility front. Monitoring the development and visibility of accessibility features is an important part of your test and learn. Think about how any changes could affect your audience.

Consider the effect of AI, new technologies and channels on your A/B testing and wider research. How are your media buying, planning and research agencies adapting their methodologies towards more user-centred content creation?
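As a reminder of what sits underneath A/B testing, here is a minimal Python sketch of a two-proportion z-test, one common way to check whether the difference between two variants’ open or click rates is likely to be more than chance. It assumes random assignment and reasonably large samples; the figures in the example are invented, and your agencies may well use different methods.

```python
import math


def two_proportion_z_test(successes_a: int, total_a: int,
                          successes_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing the rates of variant A and variant B."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if standard_error == 0:  # e.g. no successes at all in either variant
        return 0.0, 1.0
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution: erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented example: subject line A opened 200 times out of 1,000 sends, B 250 out of 1,000.
z, p = two_proportion_z_test(200, 1000, 250, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the gap is unlikely to be chance alone. It says nothing about why variant B worked better, which is where human judgement comes back in.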

OpenAI’s ChatGPT (GPT-4), Google’s Bard and Anthropic’s relative newcomer Claude 2 are among the platforms radically changing how we develop content, be it campaign copy, press releases, OASIS or wider strategy documents. If you’re in any doubt, an excellent social post by Chase Dimond gives tips on how to leverage the power of ChatGPT to fundamentally transform your ways of working. Here’s a selection of the tips:

2. Write hypnotising email hooks

Develop [number] email subject lines for our upcoming newsletter that will encourage high open rates and engagement.

Context: Target audience – [your target audience here]

What our newsletter covers – [your newsletter content here]

Email goals – [your email goals here]

Past successful subject lines:

“[subject line example one]”

“[subject line example two]”

“[subject line example three]”

Formatting guidelines:

“[your formatting guidelines here]”
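If your team reuses prompts like these, a small helper can fill in the bracketed placeholders consistently and catch any left blank. This is a hypothetical Python sketch, not something from Dimond’s post or from ChatGPT itself; it only assembles the prompt text, which you would then use in whichever tool your organisation has approved. The example values are invented.

```python
import re

# Matches placeholders such as "[your target audience here]".
PLACEHOLDER = re.compile(r"\[([^\[\]]+)\]")

SUBJECT_LINE_PROMPT = (
    "Develop [number] email subject lines for our upcoming newsletter "
    "that will encourage high open rates and engagement.\n"
    "Context: Target audience – [your target audience here]\n"
    "What our newsletter covers – [your newsletter content here]\n"
    "Email goals – [your email goals here]"
)


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Swap each [placeholder] for its value; raise KeyError if any value is missing."""
    missing = sorted({name for name in PLACEHOLDER.findall(template) if name not in values})
    if missing:
        raise KeyError("No value supplied for: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


prompt = fill_prompt(SUBJECT_LINE_PROMPT, {
    "number": "5",
    "your target audience here": "first-time buyers",
    "your newsletter content here": "monthly updates on housing support",
    "your email goals here": "drive sign-ups to the online guide",
})
print(prompt)
```

Failing loudly on a missing value is a deliberate choice: a prompt sent with “[your email goals here]” still in it is likely to get a generic answer back.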

5. Duplicate your tone of voice

Analyse the tone, style and wording in the following text: [text]

Then use NLP and hypnotic wording to write a caption in the same style about [topic].

7. Create a profitable ad campaign

I want you to act as an expert in paid social media marketing. You will create a campaign to promote [product/service] for [audience].

You will choose the most suitable demographic, slogans, media channels, budget and related activities. I will be your connection to the human world.

The goal is to generate a ROAS of at least 5x. You have a starting budget of [budget]. What should be the first ads we start running?

As a comms strategist, I found the ‘create a profitable ad campaign’ prompt particularly humbling. It’s a brilliant example of how to use ChatGPT in a sophisticated way to get high-quality outputs. To preface his post, Dimond shared the Nvidia CEO’s view that “AI won’t take your jobs, people who know how to use AI will…”. It’s a catchy soundbite, but not everyone will be convinced. It’s natural to think about ways in which tech could adversely affect our jobs in the future, and perhaps naïve not to consider it at all. As for me, I’m finding it energising to experience the changes as they happen. There are lots of informative online resources to help us use these tools to their maximum potential, including asking the tools themselves.

While concern over job losses is understandable, it’s worth remembering that AI isn’t (yet) attuned to foreseeing the impact on comms of political, economic and social change. By this, I mean the kind of sensitivities that require you to refine a line of copy because of ongoing social debate on a campaign-related issue. Or restructure a particular narrative because you’re noticing a shift in what’s important to your audience. Or suddenly pause that planned ad partnership because of a reputational issue. Knowledge and monitoring of these subtle interdependencies requires your human judgement, risk management and strategic planning.

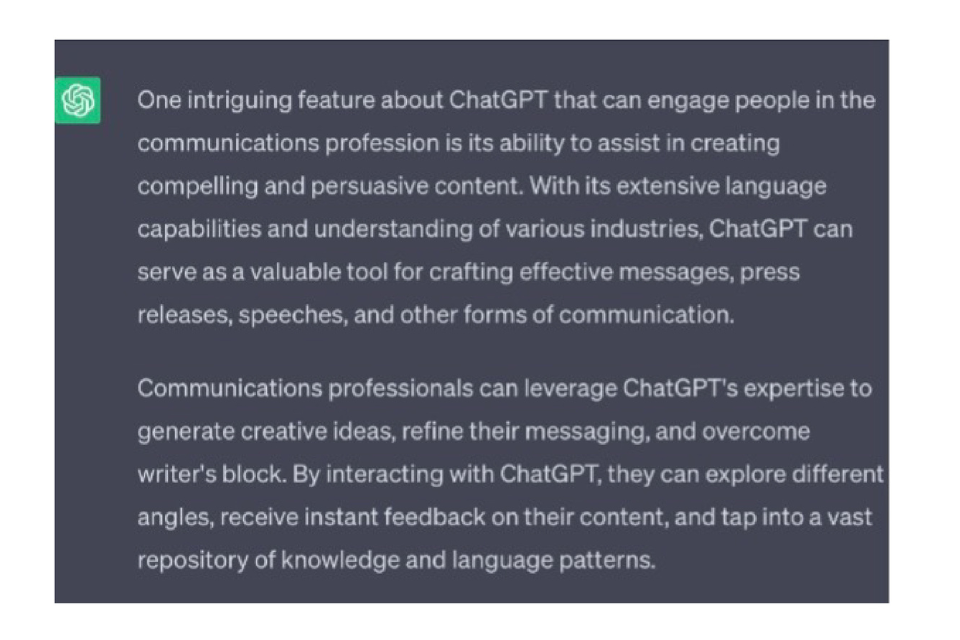

I asked ChatGPT to share an intriguing feature about itself. My initial scepticism was swiftly swept aside by its relevant, concise and seconds-long response (see screenshot above). After exploring further, I quickly learned how much possibility the tool offered. Mind you, I did only ask for a (singular) feature and it gave me 20. Show-off.

AI image generator DALL-E 2, an equivalent game-changer in the design space, rethinks artistic possibility. What ChatGPT is to words, DALL-E 2 is to campaign imagery and design.

To what extent are your teams exploring this software? What is your organisation’s understanding of the impact of inputting information into these sites, and of how that information may be stored or used? (See ‘Review your data frameworks’ further down.) Learning how your colleagues are interacting with these tools now will help you assess future levels of ability, confidence and knowledge.

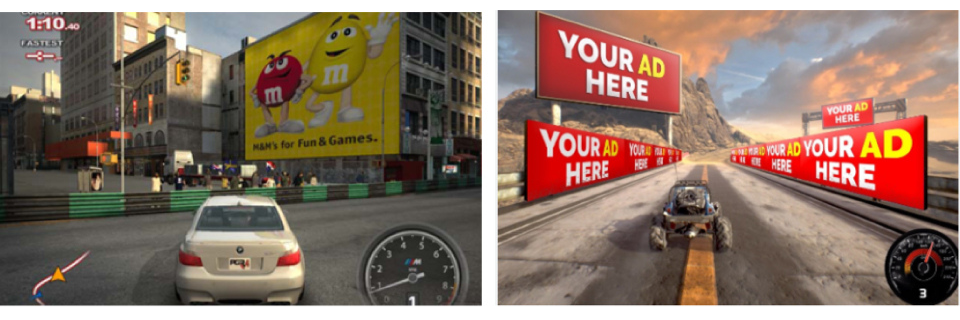

DIGA, or Dynamic In-Game Advertising, is another avenue to explore where, as The Drum explains, “…adverts appear inside a 3D game environment, on virtual objects such as billboards, posters, and bus stops…”. The site has a series packed full of tips on DIGA. Importantly, it recommends marketers recognise in-game advertising as a community rather than a channel – an important distinction from the more traditional perception of media opportunities.

Review your data frameworks

As with any accelerated development of technology, privacy, ethics and accessibility are important. While there’s plenty of praise for OpenAI, it has faced valid criticism over potential data security breaches due to its multi-server storage and public access.

In the education sector, authenticity is an emerging challenge. Winston AI and Turnitin are among the AI-detection solutions helping educators identify ChatGPT-produced essays, amid concerns about student ‘plagiarism’. A GCS session on the uses and limitations of Large Language Models (GCS members only) touched on this. Speakers GCS Chief Executive Simon Baugh and GCHQ’s David C were optimistic about what OpenAI offers while staying alert to errors. In one example, an AI-generated response with rich detail and well-cited references seemed accurate at first glance. Closer inspection revealed factual mistakes, reinforcing the importance of human-led quality control.

Just as we wouldn’t take everything we hear at face value, no matter how confident or assured the delivery, it’s helpful to consider AI-assisted tech in the same way. Check your confirmation bias when reviewing the information and seek wider perspectives. The Equality and Human Rights Commission’s take on Artificial Intelligence in Public Services is a handy resource here: “Discrimination may happen because the data used to help the AI make decisions already contains bias. Bias may also occur as the system is developed and programmed to use data and make decisions. This process is often referred to as ‘training’ the AI. The bias may result from the decisions made by the people training the AI. Sometimes the bias may develop and accumulate over time as the system is used”.

Disinformation is an even more pressing concern for us in comms, given the rising sophistication of visual, audio and real-time video Deepfakes, powered by the AI offshoot deep learning. At the extreme end is the transposition of profile photos onto pornographic content. This worrying, ongoing abuse of innovation can easily be used as a means of coercive control. Whilst planned changes to the UK’s Online Safety Bill provide a deterrent, the pace of technology, user anonymity and the sophistication of Deepfakes make regulation difficult.

All this stresses our responsibility to communicate authentically to continue building trust in our services. Does that mean labelling each piece of AI-generated copy as such? Perhaps, in the short term, or until AI becomes a norm rather than a novelty. Consider any ethical frameworks you can start evolving now to save time later:

- What are your existing comms-related data privacy and ethics regulations? Check out the current official guidance and these helpful tools: Guidance to civil servants on use of generative AI, and the Data Ethics Framework.

- Who will you consult to adapt them? Speak to colleagues in legal, ethics and compliance teams for initial guidance. If you can, involve AI experts alongside Strategic Communication specialists.

- Do you know your organisation’s plans to engage with digital transformation? Given the current fast pace of tech developments, little and often updates might work in your favour.

When adapting to innovation, remember that human minds developed the changes we’re experiencing now. Their trajectory from concept to execution involved a lengthy process of design, test, implement, evaluate and refine. It’s important we apply this same approach to their adoption within our comms. Keep an open mind, think creatively and stay curious.

Disclaimer – no robots were involved in the production of this blog.

Further resources:

Responsible innovation:

- GCS Horizon Review 2023 – GCS (civilservice.gov.uk) (GCS members-only access)

- UK government resources on artificial intelligence

Automated dashboards:

- How Tableau GPT and Tableau Pulse are reimagining the data experience

- Salesforce puts generative AI into Tableau, gives Big Data the gift of gab (techrepublic.com)

- Review: Microsoft Dynamics 365 Can Help Governments Serve Their Communities | StateTech Magazine

Deepfakes:

- New laws to better protect victims from abuse of intimate images – GOV.UK (www.gov.uk)

- As Deepfakes Flourish, Countries Struggle With Response – The New York Times (nytimes.com)

Dynamic In-Game Advertising (DIGA):

- The Drum | ‘Brands Are Guests In That Space’: Gaming Isn’t A Channel; It’s A Community

- The Drum | Top 3 Predictions For The Future Of In-game Advertising

- The Drum | Gaming Advertising