GCS Innovating with Impact Strategy

Applying responsible innovation and use of data to transform government communication

Table of Contents

- Introduction

- The foundations of our strategy

- Empowering responsible innovation

- Creating a culture of innovation across GCS

- Delivering new tools and platforms

- Delivery roadmap

Introduction

The current revolution in artificial intelligence (AI) is poised to be as transformative as the Industrial Revolution. Our world is being shaped by rapid technological advancements and an evolving communication landscape. We want and need to seize the new opportunities this brings to transform government communications for the public good.

The role of effective government communication has never been more crucial. The ability to connect with the public, engage in meaningful experiences and provide access to vital information is at the heart of our purpose. However, the emergence of new technologies, such as generative AI, has brought with it fresh challenges and raised important ethical questions.

The Government Communication Service (GCS) wants to be a leader in the innovative use of technologies and data, to drive better effectiveness and impact for the public good. We want to embrace new ideas and ways of working to meet the challenges of today and those on the horizon, such as:

- How do we ensure government communications can benefit from the advances in AI, in a way that does not conflict with our ethical standards?

- How can we better harness our data in a privacy-first way to unlock new insights and better reach diverse communities?

- How will we tackle the impact of realistic synthetic content on the public’s trust in government messaging?

Embracing emerging technologies and innovative initiatives is how we will meet these challenges and improve our collective effectiveness and efficiency.

What does this mean if we are successful? Considering the responsible use of AI alone, external studies have shown that multidisciplinary teams which successfully integrate generative AI into their work can deliver high-quality results more consistently, and more quickly than would otherwise be possible. Just a 10% improvement in our efficiency could unlock thousands of work hours each year across GCS, enabling us to be more productive. By reducing the work it takes to produce high-quality first drafts of communications, such as TV and radio scripts, social media copy and other assets, AI could potentially save us millions of pounds each year, driving better value for money for taxpayers.

The aim of this strategy is to set out how every GCS member can seize the opportunities of the revolution in technology, analysis, research and data, to dramatically improve the impact of government communications.

The effective and responsible use of new technology and data can help us create more relevant, interesting and engaging communications, which are responsive to the public’s needs.

The rapid changes within the communications and marketing landscape, driven by emerging technologies such as AI, also enable us to be more productive. They will free us from many repetitive and time-consuming tasks, and open up opportunities for us to develop new skills and pursue a wider range of career paths.

“Technological advances are creating new possibilities for public communications. This strategy aims to ensure the Government Communication Service stays at the forefront in this changing landscape. We want GCS members to feel empowered to rapidly adopt new technologies, with knowledge, ambition, and ethical values guiding the way. By combining the latest innovations with our long-standing public service principles, we can deliver communications that are truly world-leading.”

Simon Baugh, Chief Executive, Government Communication Service

This Innovating with Impact Strategy brings together the steps we have already taken since the publication of the GCS 2022-2025 strategy, Performance with Purpose, and outlines the next steps we will collectively take to transform government communications for the public good.

Figure 1 in the strategy provides an overview of the core elements which make up GCS’s ecosystem of innovation and data initiatives.

Encourage, identify, test and scale across HMG

10% innovation fund

- Encourage departments and ALBs to spend up to 10% of their campaign budget on innovative techniques to pilot, test and learn from.

Innovation Hub

- Identify the most promising uses of new tech, tested in campaigns, and scale them across the GCS.

Project Spark!

- Dragon’s Den-style competition to encourage and support innovation across the GCS.

Specific projects delivered by central function

Custom GCS AI co-pilot

- Creating a private and secure conversational AI tool for GCS members, tailored to government communications use cases.

Audience segmentation

- A digital audience segmentation tool for planning and evaluation.

Personalisation

- Harnessing our first-party data, scaling interactive tools like AI chatbots, and working with GOV.UK to improve user journeys.

Underpinned by

Propriety and ethics

- Confidence to make decisions.

GCS Connect

- Network for sharing innovation.

GCS Advance

- Step-change in skills.

The foundations of our strategy

In 2023, GCS produced the Horizon Review into Responsible Innovation report together with the Responsible Technology Adoption Unit (RTA). It summarises our research into emerging technologies and uses of data in the marketing and communications industry, identifies the important ethical considerations these bring, and sets out recommendations for government communications.

It provides all members with a common understanding of the changes our industry faces, and the opportunities and risks these pose. This was then built upon in a series of workshops with industry partners, government communicators from a wide range of departments, arm’s-length bodies (ALBs), and agencies.

The findings from this research and engagement form the basis of this strategy.

Definition of innovation within GCS

To embrace innovation together as one communication service, we first need to define what we mean by ‘innovation’ for government communications. The purpose of innovating is to drive better effectiveness and impact of our work, for the public good.

GCS, however, is a diverse community and delivers a broad range of communications activity across hundreds of organisations to millions of people.

We worked with generative AI to produce an initial definition, and then built on this together with GCS members to develop a shared definition of innovation unique to government communications.

For government communications, innovation is defined as doing something new that drives value within one’s local organisation or area of work.

Three core pillars of the strategy

To deliver on the mission to drive better effectiveness and impact, our Innovating with Impact Strategy is structured around three core pillars.

1. Empowering responsible innovation in government communications.

- Placing our ethical values at the heart of our approach, alongside data protection, security and privacy, and developing tools to give all GCS members confidence to explore new approaches and minimise risk.

2. Creating a culture of innovation across GCS through encouraging, identifying, testing and scaling innovative ideas.

- This will be supported by strong networks for sharing innovation and fostering collaboration, and building the skills to confidently innovate and responsibly harness data.

3. Delivering new tools and platforms at the centre for the benefit of all government communicators.

- This includes the development and roll-out of Assist, a conversational AI tool for GCS members, tailored to government communications use cases.

In an era where trust in public institutions is low, and emerging technologies are transforming our world before our eyes, we all have an important role to play.

This strategy sets out how we will harness rapid technological changes for the public good, deliver a more efficient and effective GCS, and strengthen public trust in government communications.

Empowering responsible innovation

Innovative technologies and new approaches are often assessed solely against their impact in boosting our effectiveness or efficiency. This isn’t enough anymore.

New technologies and means of communication are raising new ethical considerations for government communications to address, in particular around accuracy, bias, transparency, privacy and security.

Acting transparently and responsibly in our roles is also crucial to ensure that GCS continues to protect privacy, promote accountability, prevent misuse of information, and ensure that our use of new advancements benefits society.

We need to ensure that we are considering all of these issues as we innovate, applying our values and principles in order to innovate ethically in this era of rapid change.

We will be guided by our ethical standards

Placing ethics at the heart of our approach to innovation requires us to clearly set out the safeguards we will put in place when adopting new technologies. We also need to make it as easy as possible for all government communicators to apply those safeguards in their role.

For an ethical approach to be effective, it also needs to reflect our priorities and what is important to us as government communicators. GCS has an abundance of existing ethical principles established in legislation, regulation and our own guidance, as well as rules on how to assess value for money in campaigns or how to comply with privacy and data protection regulations. However, it is not always clear how these should be applied in our day-to-day roles in order to innovate responsibly.

Last year, GCS worked with the Responsible Technology Adoption Unit (RTA), and the Head of Ethics at our cross-government media buying agency, to map our values and identify how they can practically be applied to the challenges we face in embracing new technologies and uses of data.

This work highlighted the breadth and depth of ethical values, principles, and codes of conduct we have to guide our delivery of government communications. In order to navigate and implement these effectively in our roles, the first step is to structure these into a hierarchy that is easy for us to understand and apply.

British values such as Democracy, the Rule of Law, and Individual Liberty are the foundations of our society, and are therefore the first principles we should consider. For example, adhering to our Democratic values means we should always act in a transparent manner, and be able to fully explain or justify an approach or how a decision was made to our Minister(s). This is especially important when it comes to our use of generative AI.

These are then built upon by the Civil Service Code, which sets out our core values of integrity, honesty, objectivity, and impartiality. For example, honesty is essential for maintaining trust in democratic institutions, and we strengthen this through communicating our actions transparently.

Next, the GCS code of conduct governs how the government communicates with the public. For example, government communications have to be accurate and truthful, ensuring that all information presented to the public is reliable and factually accurate. This is especially important for our use of innovative technologies such as generative AI, which we need to apply in a way that upholds accuracy and inclusivity and mitigates bias.

To enable all government communicators to more easily navigate and apply these principles in their roles, GCS has developed a Framework for Ethical Innovation.

A first for government communications, it is designed to support communicators across government to navigate, understand, and weigh our legal obligations, propriety guidance, and policies on specific topics, such as the Generative AI Framework for HMG. It provides a structured process that members can follow to guide their use of emerging technologies and data.

The framework clearly sets out safeguards we will adopt to assess new technologies, and aims to provide government communicators with the confidence that their approach is in line with our ethical standards.

New ideas are rarely entirely ethical or entirely unethical. This framework is designed to help all members identify what they should be considering from the outset of their innovative work, and to guide their thinking using our values, so that they make the best decisions possible.

The framework is already being successfully applied by the central GCS team, supporting them to evaluate whether AI technologies available in the marketplace are appropriate for use in government communications. In a recent example, we explored whether an AI tool developed to assess diversity in communications content in the US could be effectively applied within a UK context. Using the new ethical framework to guide our exploration, it was clear that the technology was not yet mature enough to avoid perpetuating bias in its results, and so was not yet suitable for use in government communications.

We will proactively engage and adapt our approach

To keep pace with our rapidly evolving world, our approach will be a ‘living model’. We will regularly adapt it to keep in line with emerging technologies, risks, thought leadership, and official government guidance.

From April 2024, we will begin piloting the framework with an initial cohort of teams across GCS, with the aim of refining it so that it is as practical and helpful as possible. Alongside this, we will invite stakeholders from civil society, industry, and academia to review it, with the aim of further enhancing it over time in ways appropriate to our purpose.

In parallel, in 2024 we will incorporate many of the learnings gathered during development into an updated version of the GCS SAFE Framework for standards in digital brand safety for HM Government advertising. This will ensure GCS members can continue to confidently apply our high standards of brand safety to protect government communications. We also aim to maintain the interested public’s trust in government communications by demonstrating that our approach is keeping pace with the continually evolving landscape of digital platforms.

To protect public trust in government communications, the public should know how we are using technology and data, and we should communicate this in increasingly clear ways. Existing mechanisms such as Privacy Notices can often be difficult for non-expert audiences to follow, and their impact can be hard to understand. To this end, the central GCS team will test and evaluate new ways to explain clearly, in plain language, how we use technology and data to deliver government communications. For example, this could include:

- Testing privacy summaries or highlights: providing condensed versions of the main points from a privacy policy, to help the interested public quickly understand the most important aspects of our data handling practices. These summaries could include simple bullet points, infographics, or a brief video that outlines key points such as what information is collected, how it’s used, and a user’s rights concerning their data.

- Testing layered notices: these present privacy information in layers that allow the public to delve deeper into the details as needed. The first layer might be a very brief overview, with additional layers providing more comprehensive information. This can be done through the use of expandable sections on a webpage, a series of web pages with increasing levels of detail, or a multi-page digital booklet that the public can navigate through at will.

This activity will aim to ensure the interested public is able to better understand what types of data we use to drive our communications, and why, as we explore innovative new opportunities in this area. In this work we will continue to be guided by existing best practices, for example, those laid out in the Algorithmic Transparency Recording Standard (ATRS) for public sector bodies.

This will also include outlining the existing safeguards we have put in place, such as our ‘Least Data by Default’ approach, which continues to guide our use of data: we use data only when it is necessary, and when doing so is justified to provide public good and value for the taxpayer. This approach is outlined in greater detail in section 4.

With transparency comes scrutiny. As Civil Servants, we are accountable through our democratically elected Ministers and the UK Parliament. We also recognise that the societal impact of current technological innovation means that the wider industry, academia, and civil society are increasingly engaged in the conversation.

To ensure we consider a diverse range of perspectives on issues relevant to government communications, GCS will engage with bodies such as the Central AI Governance Board at the Cabinet Office and the new AI Safety Institute, to inform, review, and guide our approach to adopting new technologies such as generative AI.

The purpose will be to uphold our values and ethical principles, and maintain public trust in government communications. We must guard against inadvertently introducing or perpetuating bias within our work by using AI.

Creating a culture of innovation across GCS

Nearly 7,000 brilliant public service communicators work in government departments, agencies and arm’s-length bodies. Our membership includes professionals spanning multiple disciplines: Data & Insight, Digital, External Affairs, Internal Communications, Marketing, Media, and Strategic Communications. We work across 24 ministerial departments, 21 non-ministerial departments and more than 300 agencies and other public bodies. We serve the public across the United Kingdom and promote our interests overseas through the network of communicators based at embassies and posts around the world.

Many communication teams have led in adopting innovative new approaches to deliver communications. The recent OECD Public Communications Scan of the United Kingdom highlighted that there is ‘an opportunity to elevate all departments to the same high standards and to consolidate promising practices and methods that make for more responsive, inclusive communication’ (OECD, 2024).

To achieve this, we are placing a systematic approach to innovation at the heart of this strategy. The purpose is to ensure that, going forward, our innovation efforts follow a common approach, to facilitate greater collaboration and knowledge sharing across GCS organisations.

Through this shared approach, we can all encourage and identify new ideas that can drive value, rapidly test these through robust piloting, and scale the learnings right across GCS, while applying our ethical principles at every stage.

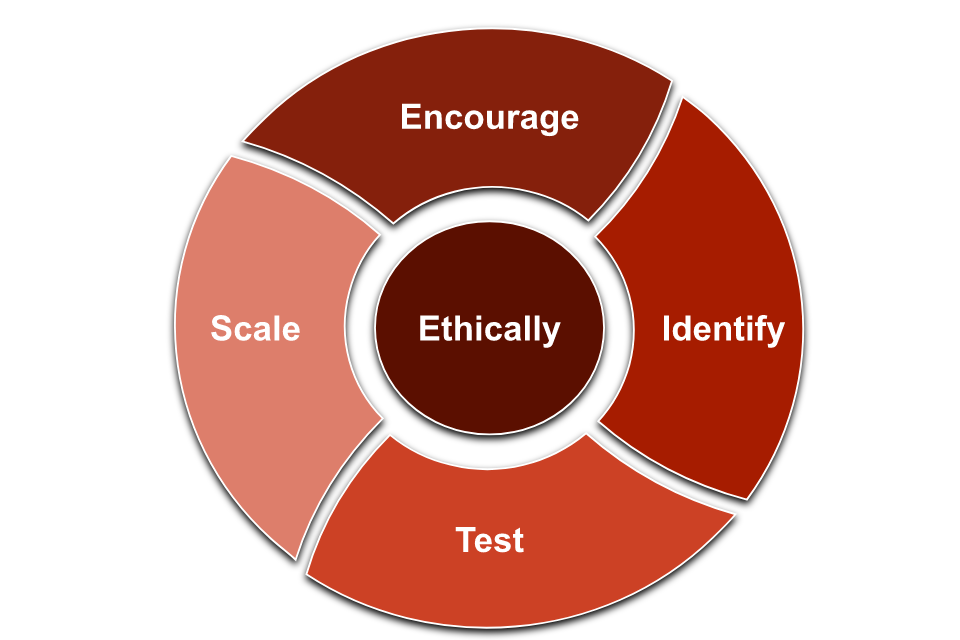

Figure 2 in the strategy shows the systematic GCS approach towards innovation with acting ethically at the centre, namely:

- Encourage

- Identify

- Test

- Scale

Furthermore, for innovation to flourish across our community, it is essential we have a strong, shared culture of innovation and creativity. Ultimately, a culture of innovation is built upon a shared set of behaviours. These should support us to adopt innovative approaches in our work, think creatively, enable us to learn new skills, and provide us with the confidence to take managed risks where appropriate.

During 2023, GCS held a series of workshops open to all government communicators to understand how we can collectively build a shared culture of innovation across our different organisations. These workshops included participants from 18 government organisations, including 10 central departments and 8 arm’s-length bodies. Participants reflected on their understanding of innovation in the context of government communications. Having explored the meaning of innovation, they identified behaviours associated with innovation, discussed barriers to those behaviours, and finally identified the most practical initiatives, tools, resources, and guidance required to enable them.

The findings form the basis of the following toolkit for all government communicators. The purpose is to provide practical support at each stage of our systematic approach to innovation: encourage, identify, test, and scale, ethically.

Encourage

Innovation isn’t merely an option; it is a necessity for government communications to improve the effectiveness and impact of our communications and engagement with the public. To achieve the positive outcomes we are striving for, we must think creatively about how we both overcome long-standing challenges, and keep pushing to deliver more value, through embracing and testing new approaches.

Common barriers that people experience include feeling that they would not be supported to develop an idea, assuming that someone else must already have had the idea, or feeling that their organisation values minimising risk over rewarding change.

“When I speak to Ministers, they tell me they want more creativity and innovation. Our people need to know that we will support them to try something different and we will have their backs if it doesn’t work.”

Simon Baugh, Chief Executive, Government Communication Service

These are all cultural barriers that we can tackle together, by sharing our ideas and supporting new initiatives. We must encourage ourselves to think differently, to try something new, to take small steps or embrace game-changing ideas, to collectively build a shared culture of innovation.

This is why GCS is committed to never penalising anyone for trying something creative or innovative that didn’t work.

This is also why we launched Project Spark! – our internal Dragon’s Den-style competition encouraging all GCS members to submit their ideas to transform government communications. Over the last two years, the central GCS team at the Cabinet Office has helped develop and pilot the winning ideas.

Wave 1 received 19 ideas from 13 teams across two continents, and has led to:

- Developing a new strategic approach to working with influencers, treating them as we would any other established channel. Originally pitched by the Department for Culture, Media and Sport (DCMS), this is especially important for government communications due to the continually changing media consumption habits of our audiences. As a result, in late 2023 GCS launched its new Guidelines for influencer marketing to help all GCS members work with social media influencers in an effective, professional and transparent way.

- Re-imagining weekly grid systems used across government to plan and coordinate government communications. This ambition considered not only data collection, but visualising insights, and sharing the effectiveness of communications to generate continuous learnings and play-forward actions. Originally pitched by the British Embassy in Washington D.C., this has developed into the aforementioned initiatives within this strategy to harness data to improve our impact.

- Exploring the use of ‘attention’ as a metric to improve campaign performance. Originally pitched by a team from the Department for Work and Pensions (DWP), this led to a pilot of an off-the-shelf solution with the Help for Households campaign. This was the first test of its kind for government communications. It demonstrated that, while attention-based approaches are in their infancy, there is value in continuing to test them robustly and at a larger scale. Two further test-and-learn pilots are now underway to assess the value of this innovative approach for government communications.

The competition for Wave 2 took place over the summer of 2023, and was won by a team from the Met Office. They pitched making government communications increasingly accessible for all by embracing new technologies and practices. This has been taken on by the central GCS Innovation Lab team, which will pilot projects by May 2024.

Our goal is for all GCS members to draw inspiration from these teams, and to reach out and collaborate with others to advance new innovative ideas in their own areas of work.

As well as encouraging responsible innovation across government communications, we want to encourage members to develop the skills that enable this, and help them become confident using technology and data in their roles.

This is why, alongside the ‘AI for Communicators’ course, the ‘Data and Insight for communicators’ course has been made available to members on GCS Advance, our professional learning and development programme.

In this course, members will learn how to adopt a data-first mindset by embedding data in every stage of campaign planning. They will learn how to use insights to inform objectives, and how data can help us reach the right audience for our campaigns. Finally, the course looks at how to monitor campaign implementation with analytical tools, and how to use evidence to identify and communicate recommendations.

Identify

Our Horizon Review identified several emerging technologies and applications of data most likely to shape the communications and marketing industry over the next 2-5 years. To ensure we are continuously scanning the horizon for new opportunities, in 2023 GCS launched its new Innovation Hub.

The Innovation Hub brings together leaders from across GCS, technology companies, our cross-government media-buying agency as well as others, to scan the horizon for future trends and develop a pipeline of the most promising new technologies that can be piloted in government campaigns.

The Hub invites tech start-ups to make a 15-minute pitch of their idea for improving government communications. Successful, ethical propositions that fit GCS needs secure an opportunity to partner on our campaigns.

The Hub has already identified several innovative ideas to take forward into a pilot. For example, GCS is trialling a predictive analytics tool that could help digital and campaign teams test how well their creative content attracts attention. Other innovative solutions identified by the Hub are already being scaled across government communications, including the use of non-intrusive in-app audio technology to drive awareness on issues such as social housing and vaccinations.

The work of the Innovation Hub is being shared across GCS. This is to ensure innovative new technologies and approaches identified are considered early on in the communications planning process, and are scaled across government communications to maximise their value.

Test

The biggest private sector advertisers spend around 10% of their advertising budget on innovation. This enables them to test and learn by piloting new technology and techniques. So, starting in financial year 2023/24, GCS changed its spending controls to encourage departments and ALBs to spend up to 10% of their existing campaign budget on innovative techniques to pilot, test and learn from.

This enables us to identify promising innovations to scale across government, ensuring we continue to use public funds responsibly and judiciously while seizing new opportunities. It also creates more opportunities for organisations to learn what works well, and what can be changed, to drive their campaigns.

Through this initiative, more than 30 innovation projects are being piloted in the first year. Five themes are emerging from those pilots, including new data-driven audience insights, new partnerships and channels, immersive experiences, and campaign optimisations. Some of the projects are new to the piloting organisation, and some are new to government.

A great example is the award-winning Every Mind Matters campaign. Using innovative AI technology, the campaign was able to target an audience’s linguistic signals to deliver impactful, co-created content on mental health. This highlighted the positive role AI technologies can play in helping the public access mental health support, and in helping government communications reach very specific audiences. The campaign drove a 27% rise in 18-34 year-olds who reported feeling empowered to reframe unhelpful thoughts. In 2024, this technology is being scaled by other departments to help them better reach the right audiences, for example on other health issues such as smoking.

We also need the right testing and evaluation frameworks to honestly appraise which tools are really working. We’ve published test and learn guidelines for GCS, using the latest research and scientific rigour to explain how to design A/B tests, multivariate tests and attribution models.
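To make the A/B testing idea more concrete, below is a minimal sketch of one common way to compare two versions of a campaign asset: a two-proportion z-test on click-through rates. It assumes a simple split in which each person sees only one version. The `Variant` class, the `two_proportion_z_test` function and the figures in the example are hypothetical illustrations, not taken from the GCS test and learn guidelines, which may recommend different methods.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    """One version of an asset in a hypothetical A/B test."""
    name: str
    conversions: int  # e.g. people who clicked through (illustrative)
    impressions: int  # people who saw this version

    @property
    def rate(self) -> float:
        return self.conversions / self.impressions


def two_proportion_z_test(a: Variant, b: Variant) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the null hypothesis rate(a) == rate(b)."""
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (a.conversions + b.conversions) / (a.impressions + b.impressions)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions))
    z = (b.rate - a.rate) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    control = Variant("control", conversions=200, impressions=10_000)
    variant = Variant("new creative", conversions=260, impressions=10_000)
    z, p = two_proportion_z_test(control, variant)
    print(f"z = {z:.2f}, p = {p:.3f}")  # z = 2.83, p = 0.005
```

With these invented figures, the difference between a 2.0% and a 2.6% click-through rate would be unlikely to arise by chance at the conventional 5% level. In practice, a test like this would also need a sample size agreed in advance and care not to stop the test early on the basis of interim results.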

We have also worked with Henley Business School to update the GCS Evaluation Cycle with a focus on innovation and real-time evaluation. The updated cycle was developed in parallel with this strategy to ensure the two are mutually supportive and provide a joined-up approach for members across GCS. It places an emphasis on continuous learning and improvement by embracing innovation at every stage of delivering communications for the public. The updated cycle was launched on the GCS website in February.

These resources are designed to support all GCS members to bring a robust test-and-learn mindset to their work, and to ensure public confidence in our rigorous approach.

Scale

Our engagement with the membership highlighted that one of the most significant challenges to driving innovation across government communications is having the right connections in place to share successes and learnings. Due to the diversity and scale of GCS organisations, opportunities are often not communicated quickly enough for other teams to benefit, and lessons learned are not always made easily accessible to inform and shape future plans. Establishing effective networks for promoting and sharing innovation therefore needs to be a fundamental ingredient of our strategy.

This is also echoed in the recent Public Communications Scan of the United Kingdom, where the OECD noted that ‘GCS could support innovation through the development of communication “sandboxes” (or experimentation labs) and incentivise creative exchange among teams and communities of specialists’.

To achieve this, we can build on our established platform: GCS Connect. This is our members-only online portal for GCS products and services. More than 5,000 GCS members have signed up since November 2022 – allowing them to find others doing similar work to themselves. By March 2025, GCS will create a shared innovation forum on the platform, where case studies covering live activity can be shared, as well as learnings, and recommendations, accessible to all members through their existing user accounts.

In the interim, we will support this by establishing a new ‘Generative AI in Communications’ community group in the first half of 2024. Teams across government communications will be able to use it to share their applications of generative AI, results, learnings and opportunities to collaborate, helping to ensure our use of generative AI is joined up.

This will be our network for innovation: allowing people to share what has worked and what hasn’t, to virtually develop and share ideas and to ask for help. GCS Connect will also be the host for both GCS Advance and our GCS conversational tool, Assist, once released in 2024.

Delivering new tools and platforms

We have identified three priority areas for innovation in government communications, to address the challenges brought on by rapid technological advancements and an evolving communication landscape: the application of generative AI, responsible use of data to drive innovation, and greater personalisation to improve our connection and impact with the public.

These priorities are based on the objectives of our GCS Performance with Purpose strategy, and informed by the GCS Horizon Review and by engagement with industry and government communicators.

By focusing our efforts in these three priority areas, we will be able to rapidly scale the benefits across government communications, and to the public. For example, if an AI virtual recruiter can effectively support applicants to join the armed forces, then it is right that we explore the potential to scale this technology to also support people to become nurses, teachers, carers and police officers.

Generative Artificial Intelligence

Generative AI technologies, including Large Language Models (LLMs), are transforming our world. This is especially true for communications and marketing, where the ability of AI to synthesise text, imagery and video has fundamental repercussions for how we can deliver engaging communications for the public.

Our Horizon Review highlighted that generative AI can open up our thinking, enabling us to consider new perspectives on a topic or identify new approaches to an issue. It also has the potential to unlock a new era of hyper-personalisation, giving communicators the ability to produce finely tailored communications and messages that resonate with different groups of people, at a scale that would have been cost-prohibitive only a short time ago.

Generative AI technology, capable of understanding and generating human-like text, opens up the ability to craft messages in multiple languages and increasingly tailor them to cultural nuances. This can support government communications to break through language barriers and better reach diverse audiences. These capabilities also allow for the creation of hugely varied digital content at scale. This has the potential to enhance engagement across different parts of society that can be hard to reach through traditional approaches.

Conversational AI tools can also enable instant, scalable interactions with government for the public, offering services and information in a way that is tailored to their unique needs. Communications which are relevant and which resonate are more likely to increase engagement with the public, driving awareness of our communications, and access to public services.

AI can also enable us to be more productive, reducing the time taken to complete routine tasks, and enabling us to apply our industry-leading standards and best practices more effectively and efficiently.

One of the core principles in the Civil Service Code is honesty – the need to set out facts and issues truthfully – which is why the adoption of generative AI to support government communications must be approached with caution. AI technologies currently have no concept of the truth, and outputs are inherently prone to the biases or discrimination of the data they have been trained on.

Weighing these opportunities against the risks, we believe it is right to adopt generative AI to support us in delivering government communications. To do so responsibly, GCS has developed its first generative AI policy, designed to harness the benefits, deliver increasingly effective communications for the public and maintain our ethical standards.

This policy is designed to guide all government communicators in the responsible and transparent adoption of today’s generative AI in our work. It sets out where we believe it is right to use generative AI for public benefit, and importantly, how we will act transparently in our use.

The policy was developed with input from Directors of Communication and Heads of Discipline from across GCS. We also engaged with other government organisations in its development, such as the Responsible Technology Adoption Unit (RTA), key technology partners and agencies, as well as academia.

It sets out several overarching principles for responsible use of generative AI by all our members, including:

- Government communications will not use generative AI to deliver communications to the public without human oversight, in order to uphold accuracy and inclusivity and to mitigate biases. Human oversight will mean that human qualities, such as curiosity, critical thinking and empathy, remain part of how we deliver government communications.

- Government communications will always uphold factuality in our use of generative AI. This means not altering existing digital content in a way that removes or replaces its original meaning or messaging.

- Government communications will not apply generative AI technologies where it conflicts with our ethical values or principles, for example, as set out in the Civil Service Code, or the Government Publicity Conventions.

To use generative AI in a manner that is in line with our ethical standards, we need to act transparently in our use of the technology. This is why we will also clearly notify the public when they are interacting with a conversational AI service rather than a human.

This new policy will ensure a consistent approach across government communications, so that the public can understand, trust and benefit from a seamless experience.

We recognise that this is a fast-evolving area, so GCS will continue to work closely with other government organisations to inform our use of this emerging technology and adapt our approach accordingly. This will ensure that our use of generative AI remains in line with the latest official government guidance, industry standards and public expectations.

Collaborating with generative AI

We recognise that today’s generative AI needs a human to guide and check its work. Used well, generative AI can also enhance our own skills and talents. Recent studies have shown that collaborating with AI can help professionals complete tasks more quickly and to a higher standard. Importantly, these studies indicated that less experienced professionals benefit the most from the technology.

AI as an ‘assistant’ is therefore one of the areas of greatest potential, which is why GCS will launch an AI-powered tool focused on government communications for its members in 2024.

The project aims to put AI technology in the hands of GCS members, giving them access to the benefits of using generative AI in their roles, and empowering them to improve the effectiveness and efficiency of government communications. To achieve this, we are creating Assist, a private and secure conversational AI tool for GCS members, tailored to government communications use cases.

To provide government communicators with high-quality responses, Assist needs high-quality inputs. Our aim is for the tool to contain GCS professional guidelines and frameworks, communication case studies, proprietary audience data and insight, and ethical and regulatory guidelines, to enrich its responses and produce high-quality outputs.

As a result, GCS members will be able to prompt the tool to generate draft text, plans and ideas that enhance their own thinking, inputting data securely in the knowledge that it will not be accessible outside government. It will help members to complete many time-consuming tasks more quickly, freeing up time to apply their talents to creative and collaborative work.

Uniquely, Assist includes pre-engineered prompts built around common communications use cases. This enables members with little or no experience of using AI-powered tools to also benefit from collaborating with this new technology.

In late 2023, a prototype was developed and piloted with the central GCS team at the Cabinet Office to evaluate the quality of outputs, and better understand how different teams can benefit from collaborating with AI, no matter what area they work in.

We will launch the next-generation tool on GCS Connect in Spring 2024, giving an initial group of members across government access to test it and provide feedback before it is rolled out more widely. A phased roll-out will allow us to evaluate the tool’s impact robustly, and in a controlled manner, as it scales. This version of the tool will integrate GCS’s publicly available standards and frameworks to further optimise responses, so that users receive outputs that incorporate GCS best practice as standard.

Our ambition is to continuously develop and improve Assist, gradually opening up access to more members when possible and introducing more advanced features driven by our users’ needs. Our priority will be to introduce data-rich capabilities, enabling members to securely tailor their conversations using internal GCS data.

Our vision is that members will not always need to remember every GCS model of best practice or functional standard, because these will be built into the outputs of Assist. For example, a member who requests a communications plan will receive one that uses our OASIS model; a request for evaluation measures will draw on the latest GCS Evaluation Cycle; and a request to develop a response strategy at pace during a crisis will use our Crisis Communications Operating Model.

AI also has a role to play in improving how we understand the audiences we need to reach. The ability of AI technologies to rapidly analyse large volumes of text and surface insights which were previously hidden, or cost-prohibitive to identify, promises a step change in unlocking value from our research data. Therefore, the central team will develop and test tools that can help GCS unlock new insights from our research data and build a shared view of key research topics.

Driving skills in generative AI

In the workplace, as in society, we have only just begun to realise the potential of AI. The pace of change in 2023 has been rapid and there are many opportunities, as well as challenges, we face when adopting this technology. This is why, alongside our new generative AI policy and Assist, we have launched a bespoke ‘AI for Communicators’ course on Civil Service Learning. This will also be available through our professional learning and development programme, GCS Advance, by April 2024.

In this course, members will be able to hear first-hand from organisations such as the National Cyber Security Centre (NCSC), the Royal Navy and external thought leaders about the new technologies that underpin the growing potential of AI and large language models (LLMs). The course then moves from theory to application, with experts from GCS explaining how members can use these tools in their roles today. Finally, it discusses the current limitations of these models, so that all members can start working effectively and confidently, with a shared understanding of the opportunities, risks and best practices of using generative AI.

Responsible use is at the heart of our approach, because generative AI comes with inherent risks. This is why all members will need to complete this bespoke course before they can access Assist once it launches.

Tackling horizon challenges head on

The emergence of generative AI has also brought with it risks that are outside of our direct control, and which are constantly evolving. Recent developments in AI technology have placed the ability to create realistic synthetic content, and the ability to share it at scale, within the reach of anyone with a laptop and access to the internet.

Our Horizon Review highlighted the potential impact of this on our ability to deliver communications that the public trusts. As the Organisation for Economic Co-operation and Development (OECD) recently noted in its Trust Survey, public trust in institutions and in information at large is declining. If the public doubts the legitimacy of the communications they receive, they are likely to trust the messaging less. AI-enabled technology such as deep-fakes, which can imitate or spoof government communications and individual messengers, exacerbates this and poses a key challenge to ensuring the public recognises and trusts our communications.

Therefore, we also need to adopt an experimental approach to addressing some of the most pressing challenges that the arrival of AI has brought for government communications.

The GCS RESIST-2 toolkit sets out steps all communicators can take to identify and tackle mis- and dis-information, and reduce its impact on audiences. However, for the public, identifying fabricated or manipulated content generated by AI is becoming increasingly difficult as it becomes more realistic, representing a renewed challenge to trust in institutions in democratic societies.

People should be able to trust what they see and hear from government channels. This is why GCS will support the Department for Science, Innovation and Technology (DSIT) in its work to explore what proactive steps can be taken to protect the authenticity of the government’s voice for the public.

This is also why our new AI policy clearly sets out that GCS will always secure written consent from a person before their likeness is replicated using AI for the purposes of delivering government communications. In the limited number of cases we currently expect, a record of this consent will be made available to the interested public via an official channel, for example, listed on the GCS website. This will help people to distinguish legitimate government communications from deep-fakes or other mis- and dis-information. Our aim is to mitigate any unintended consequences that may come from greater use of AI avatars in government communications.

Currently, there is no single effective method for tackling this, so our approach will focus on exploring a range of technical solutions. For example, this could include deep-fake detection tools, digital watermarking and blockchain technologies, as well as industry-led initiatives such as the Content Authenticity Initiative (CAI).

This is also why GCS will launch the first ‘Horizon Challenge’ in 2024. The purpose is to generate a pipeline of innovative approaches to the most complex emerging issues facing government communications, which can then be taken forward to test and scale. The first challenge will invite a multidisciplinary group of GCS members and external experts to participate in a hackathon-style event at the Cabinet Office. Participants will be given a series of challenges facing government communications and tasked with designing a GCS approach to them. For example, horizon challenges could include:

- What are some concrete ways that government communications can seize the benefits of decentralised social media networks and interoperable platforms?

- How can we ethically use ambient technology, such as the internet-of-things, and ever-increasing connectivity (5G+), in real time to support government communications during a crisis?

- How could we practically protect the authenticity of the government’s voice in the era of AI and deep-fakes?

- How do we ensure the public has accurate information on government services, if they are less likely to visit official channels and use AI services instead to access this information?

The objective will be to identify concrete steps that GCS can practically and reasonably take to begin addressing important issues like these. Our ambition is to draw on our collective scale and external expertise to drive forward our thinking and to help shape the discussion in these areas.

Responsible use of data to drive innovation

The responsible use of data can power increasingly relevant messaging for the public and enable government communications to be delivered more efficiently for the taxpayer.

Increasingly data-driven communications can help us better understand and focus on the needs of individual groups or communities. This can help the public to be better informed about services that are relevant to them, and in turn empower them to access the services they need.

Furthermore, an increasingly data-driven approach can establish virtuous feedback loops, driving continuous improvement in how and where the public receives government communications, so that they reach the right people, at the right time, in the right place, to have the most impact.

The use of data and insights in informing how we, as communicators, plan and evaluate our activity has become more critical than ever before. Data and insights are the key to evidence-based decision-making. They help us understand the needs, concerns, and aspirations of those we are trying to reach, ensuring our messages resonate and our campaigns have maximum impact.

Today’s digital landscape is dominated by media and search platforms that provide users with increasingly tailored content, powered by data. This has made it possible for advertisers to reach different audiences with specific messages at a scale thought unimaginable just a few years ago. In this crowded environment, impactful communications need to be increasingly relevant and timely. To better achieve this, responsible use of data is our ally.

Using data appropriately and ethically has the potential to support greater democratic engagement, through more relevant messaging and engaging experiences for the public. However, the technologies and uses of data available also raise important questions around privacy and ethics.

The OECD Trust Survey found that 52% of UK respondents considered the government likely to use their personal data only for legitimate purposes, while 32% considered this unlikely (OECD, 2022).

It is therefore imperative that we are transparent in our approach, and act as responsible stewards for the data we use to deliver government communications.

Our use of data will always be guided by our legal responsibilities and ethical values. It will always have a clearly defined purpose and a valid justification, be supported by consent where this is required, and be used only where it does not conflict with our ethical standards. GCS also reaffirms our ‘least data by default’ approach, under which we use data only when it is necessary and justified in order to provide public good and value for the taxpayer.

Nevertheless, our Horizon Review highlighted important opportunities that come from more effective, compliant sharing and use of our first-party data, defined as data that organisations have collected themselves and control.

These opportunities include providing more tailored and efficient interactions for the public, which can reduce the need for people to provide the same data to government across multiple interactions. This can improve the public’s experience of interacting with government and deliver greater efficiencies and cost savings for GCS.

Making better use of our first-party data can also unlock advanced measurement and modelling solutions (such as optimisation, conversion and performance tools), which are increasingly available in the market and increasingly powered by AI. They offer alternatives to solutions based on third-party cookies, which are rapidly being deprecated, a shift that has prompted new approaches designed to be more privacy-focused.

This shift also raises the bar for effective evaluation of government communications. Proving the effectiveness of behaviour change campaigns and their link to longer-term outcomes is already a key challenge for us, and a more fragmented communications space will make it harder still. However, if done responsibly and at greater scale, better data sharing offers the potential to assess more accurately the impact our campaigns are having. This will enable us to better optimise our communications for the public and deliver greater value for the taxpayer.

Just because data is first-party in nature does not mean its use is automatically privacy-safe. This is why, from April 2024, GCS is introducing a three-step approach to empower members to responsibly use first-party data in their communications activity:

1. Map and discover

- Understand the landscape of available first-party data, starting in your organisation. For example, this data could be audience research, consented or modelled website engagement data, or anonymised outcome data. Bear in mind that not all of this data will be appropriate, or of sufficiently high quality, to drive your communications activity.

2. Secure consent and communicate

- Understand what permissions are in place, or need to be secured, before first-party data can be used in a privacy-compliant manner. Usage and safeguards should be clearly communicated within Privacy Notices, but we should also test other innovative ways to communicate our approach clearly and in plain language.

3. Minimise and innovate

- Determine early in the planning stages the minimum level of first-party data you need to achieve the objective. Use the GCS Test & Learn Guidelines to help you responsibly and robustly test innovative new uses of data.

- Government communications are rightly scrutinised more than other communications activities within the industry. This highlights the continued importance of our ‘least data by default’ approach. However, this should not prohibit the testing of innovative new approaches. Responsible testing in accordance with our ethical guidelines is vital in order to assess the value that novel uses of first-party data may have, and our ability to justify them for the public good and deliver taxpayer value.

With these new safeguards in place, our aim is that the public has increasing confidence in how we act as responsible stewards for the data we use to power government communications.

This is in addition to supporting government communicators to become increasingly confident in testing innovative new ideas, prioritising the piloting of privacy-first solutions, for public good.

For example, this can include the application of Privacy Enhancing Technologies (PETs). PETs are tools and methods designed to help protect personal information from unauthorised access or misuse. They enable the secure processing of data while preserving confidentiality and anonymity, helping to maintain privacy compliance and reduce risks associated with processing data.

Greater personalisation to reach the public with impact

Personalisation is all about communicating the right message, in the right place, to the right person, at the right time for them. By focusing on delivering increasingly personalised government communications, our aim is to speak to specific audiences in a way and place that is relevant for them. For the public, this means being provided more timely and helpful information about the issues relevant to them.

The level of personalisation that is appropriate for government communications will be dependent on the message, intended audience, available technology to deliver, and the ethics of the approach.

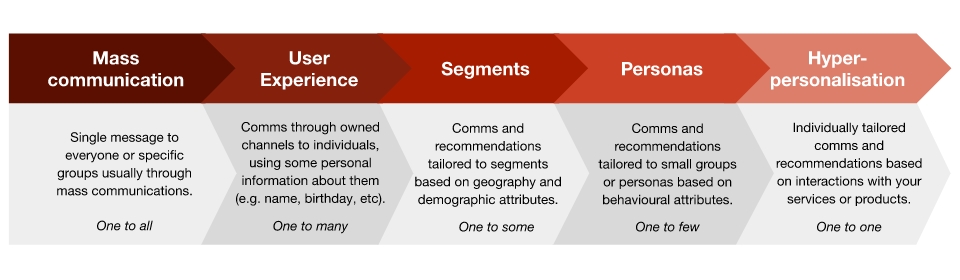

Figure 3 illustrates the different levels of personalisation possible within communications, based on current industry frameworks and approaches, as summarised below.

Mass communication (one to all)

- A single message to everyone, or to specific groups, usually through mass communications.

User experience (one to many)

- Comms through owned channels to individuals, using some personal information about them (e.g. name or birthday).

Segments (one to some)

- Comms and recommendations tailored to segments based on geography and demographic attributes.

Personas (one to few)

- Comms and recommendations tailored to small groups or personas based on behavioural attributes.

Hyper-personalisation (one to one)

- Individually tailored comms and recommendations based on interactions with your services or products.

As highlighted in our Horizon Review, the arrival of AI and the increasing availability of data have led to new opportunities to deliver personalised communications and experiences. These opportunities can enable government communications to connect with people in more ways than through mass communication (at the left of this chart) when it is appropriate and ethical to do so.

One example of this is the successful introduction of AI-powered chatbots embedded within campaign websites. These can provide personalised conversations supporting people through the recruitment process for a public sector role. We have seen this technology reduce the burden on existing call centres by 40% for recruitment campaigns. Uniquely, personalised interactions like this have also provided candidates with a private space to ask candid questions about whether a role is right for them, ensuring that the recruitment process can address their unique needs.

When there are benefits to the public to introduce more personalisation, and it is ethical to do so, it is right to assess whether the benefits can be scaled across government. Therefore, from April 2024 we will work with our major public sector recruitment campaigns to evaluate the use of chatbot technologies, and scale this technology where there is value and it is ethical to do so.

However, chatbots and similar types of personalisation may not always be ethical or appropriate, for example on certain health-related topics. We should therefore also explore other innovative technologies that can provide tailored messaging at the intermediate levels of personalisation shown in Figure 3. For example, this could mean providing the public with tailored messaging based on contextual information, using factors such as a website’s language or topic, or other innovative sources of data, to control where communications are placed.

Our Horizon Review identified Customer Data Platforms (CDPs) as one such technology. CDPs are used throughout the wider communications and marketing industry, and enable teams to bring together their first-party communications data from a range of sources into a single secure repository. This helps them to understand their customers better and to deliver more tailored and relevant communications across various channels.

As the OECD Trust Survey highlighted, almost half of UK respondents did not consider it likely that the government would use their personal data only for legitimate purposes. In this light, it is even more important that we validate and justify our use of first-party data to support more tailored and effective government communications before adopting it at scale. The central GCS team will therefore work with our major public sector recruitment campaigns to identify opportunities to robustly pilot technologies such as CDPs, with the aim of validating their effectiveness in driving public sector recruitment.

Alongside greater collaboration among communications teams across government, the central GCS team will deepen its existing collaboration with the Government Digital Service (GDS), which delivers the GOV.UK platform, and with the Central Digital and Data Office (CDDO), which puts in place the conditions needed for the government to achieve digital, data and technology transformation at scale. Our aim is to streamline the public’s experience of engaging with the government across different websites and digital channels, making it easier for people to search for, understand and access the right services for their individual needs.

GCS will also collaborate closely with Crown Commercial Service (CCS) to ensure that future cross-government commercial media and research frameworks meet the collective needs of GCS. For example, this could include embedding increasingly standardised approaches to how we harness communications data across our strategic suppliers, and to how our suppliers can use AI safely in delivering media and communications services for HMG.

In conclusion, we all have a role to play in advancing the effectiveness, efficiency and impact of government communications, through responsible innovation and use of data.

We will all be able to deliver more engaging and meaningful communications to the public if we harness the power of cutting-edge technology, embrace data as our ally, and build a shared culture of innovation.

This strategy is not only about cutting through in an increasingly complex and noisy communications landscape. It is about thriving in an ever-evolving environment, where the public’s expectations of their interactions with government run high.

As we embark on this transformative journey, let us remember that responsible innovation and use of data is the means by which we can build trust, drive our effectiveness, and make a lasting positive impact on the lives of those we serve.

Delivery roadmap

| Index | Activity | Timeframe |
| --- | --- | --- |
| Enabling responsible innovation | | |
| IDS1 | Launch the new GCS Ethical Framework for Responsible Innovation in private beta, piloting it with an initial cohort of GCS campaigns and engaging with external stakeholders. | March 2024 |
| IDS2 | Test and evaluate new ways to communicate clearly, in plain language, how we use technology and data to deliver government communications. | April 2024 onwards |
| IDS3 | Update the GCS SAFE Framework to adapt to the latest changes in the communications landscape and increase its applicability to evolving digital platforms. | September 2024 |
| Delivering new tools and platforms | | |
| IDS4 | Publish the new GCS AI Policy for all government communicators. | Live |
| IDS5 | Launch the new ‘AI for Communicators’ course on Civil Service Learning and GCS Advance, providing all members with a shared understanding of the opportunities, risks and best practices. | Live |
| IDS6 | Launch the private beta of Assist for members in GCS Connect, followed by a phased roll-out to GCS members, alongside exploring the use of AI technologies to unlock insights from research data. | April 2024 onwards |
| IDS7 | Launch the new ‘Horizon Challenge’ to develop practical and applicable roadmaps to address future challenges to government communications. | H2 2024 |
| IDS8 | Work with our major public sector recruitment campaigns to evaluate the use of chatbot technologies, and scale this technology where there is value and it is ethical to do so. | Complete by March 2025 |
| IDS9 | Test and evaluate technologies that enable greater personalisation within communications, such as CDPs, in partnership with campaigns that offer the greatest opportunity to robustly pilot their potential value for the public. | Complete by March 2025 |
| Creating a culture of innovation across GCS | | |
| IDS10 | GCS Innovation Hub: deliver a pipeline of innovative new technologies for cross-government communication teams to test within campaigns. From pitch to pilot, this will take no more than three months. | Live |
| IDS11 | Complete Project Spark! Wave 2 pilots, and begin scaling across government communication. | May 2024 |
| IDS12 | Establish a new ‘Generative AI in Communications’ community group, where teams across government communications can share their applications of generative AI, results, learnings and opportunities to collaborate. | H1 2024 |
| IDS13 | Create a shared innovation forum on GCS Connect where case studies of live activity, learnings and recommendations can be shared, accessible to all members through their existing user accounts. | By March 2025 |